When AWS first approached us about sponsoring the AWS Sydney Summit 2017, we of course jumped at the opportunity. We decided to showcase something unique that grabbed people’s attention and highlighted the value of the AWS platform. The outcome? A Rube Goldberg machine: take something simple and make it as unnecessarily complex as possible.

A bit of team brainstorming and the Joke Machine (a.k.a. The Universe’s Most AAAmazing AI) was born. The machine would take a picture of someone’s business card, pick a joke for them, tell them the joke and then tell them whether they laughed at it, all powered by bleeding-edge buzzwords: AI and serverless architecture.

Step 1: The User Journey

To begin with, we mapped out the user journey starting from them walking up to our booth:

- Take a picture of someone’s business card

- Run it through image analysis to find some distinct features. Our hypothesis: using your business card, we can determine if you are going to find a joke funny or not (this is based on actual science).

- Our amazing AI picks a joke for you based on your business card

- We analyse your face while telling you the joke

- Our AI tells you whether you found the joke funny

- Finally, you can rate the performance of our AI, training it further.

Step 2: De-risking

Before we jumped into it, we identified two key risks that could have derailed our plan for building the world’s most AAmazing AI:

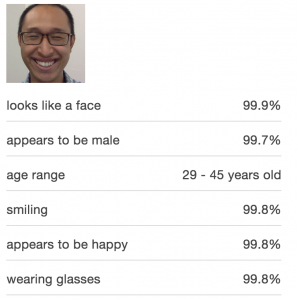

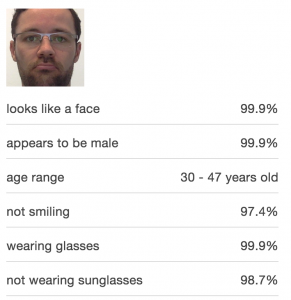

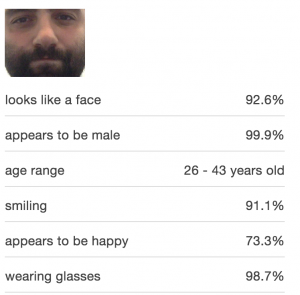

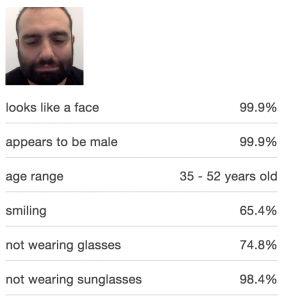

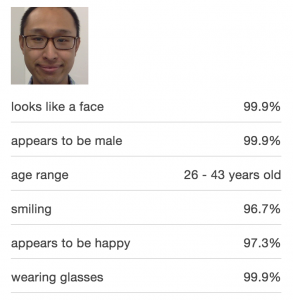

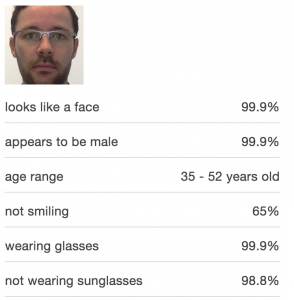

First stop was to experiment with AWS Rekognition: we wanted to make sure we could easily and accurately detect when someone is smiling. After trying AWS’ demo, and despite some inconsistencies (e.g. always thinking I was smiling, no matter what face I pulled), we were fairly confident we could do what was needed.

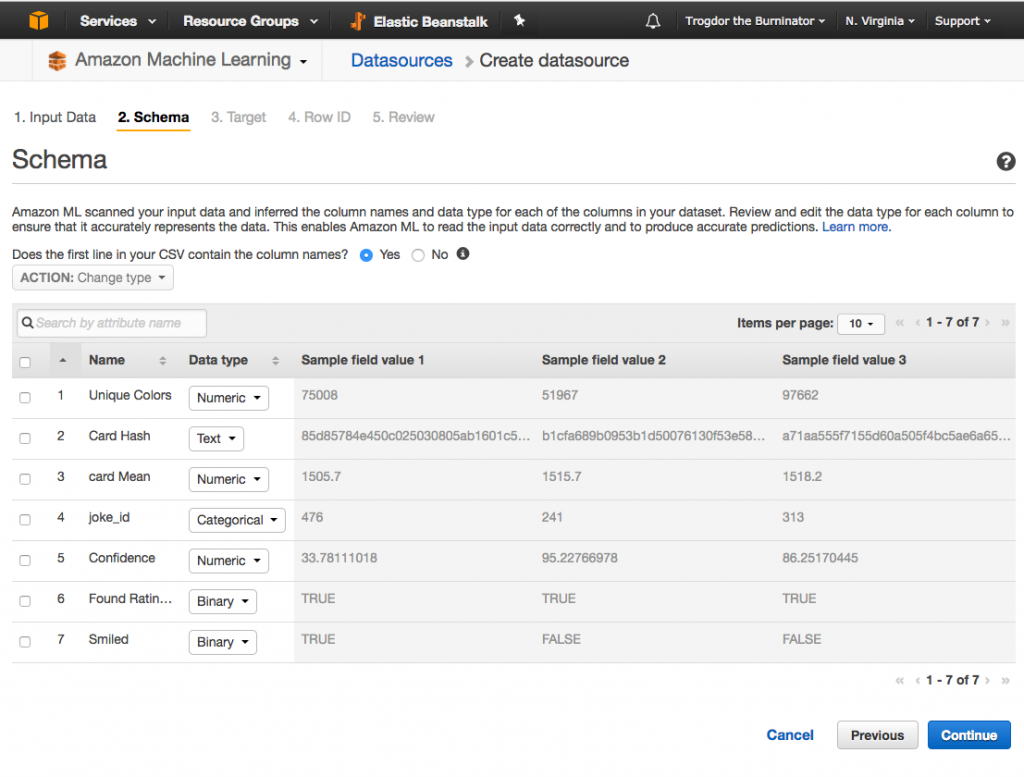

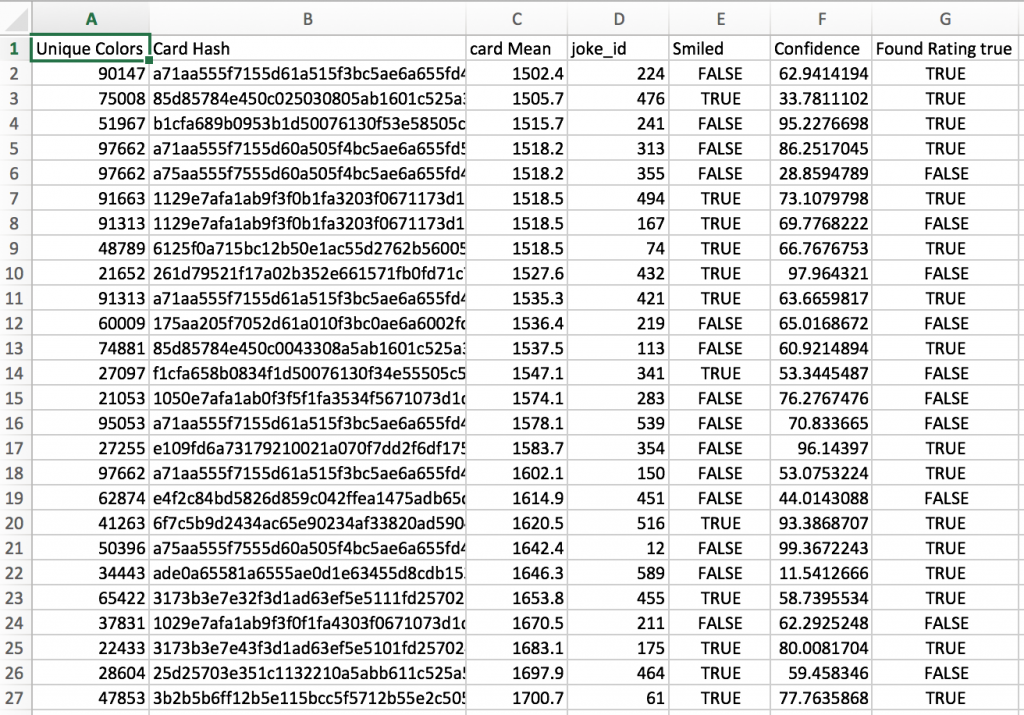

The next bit was to build the machine learning. We simply wanted to ask: for a given business card, can you pick a joke where the person smiles with a confidence of 0.9? We started by designing our data structure.

Finally, we needed some data to train our AI, so we used a tested and proven scientific method: make it up.

Now that we were confident we could use AWS Rekognition and AWS Machine Learning, we went back to the beginning and started building out our API while our designer created some mock-ups.

Step 3: Build

We wanted to take a serverless approach, so we decided to use AWS API Gateway and AWS Lambda. The frontend would take a photo and post it to AWS API Gateway, which would in turn trigger a simple Lambda function that would:

- Analyse the metadata of the photo using ImageMagick

- Submit metadata to AWS Machine Learning

- AWS Machine Learning would return a joke id
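The prediction step of that Lambda could look roughly like the sketch below. It is an illustration, not the code that ran at the booth: it assumes a multiclass AWS Machine Learning model whose predicted label is the joke id, and the function name `pick_joke`, the feature names and the model id and endpoint arguments are all made up for the example. The client is passed in so the function can be exercised without AWS credentials.

```python
def pick_joke(ml_client, model_id, endpoint_url, card_features):
    """Ask an AWS Machine Learning real-time endpoint for a joke id.

    ml_client is expected to behave like boto3.client("machinelearning").
    card_features is a dict of image metadata (hypothetical feature names).
    """
    # Real-time prediction records are string-to-string maps.
    record = {name: str(value) for name, value in card_features.items()}
    response = ml_client.predict(
        MLModelId=model_id,
        Record=record,
        PredictEndpoint=endpoint_url,
    )
    # Assumption: a multiclass model, so the predicted label is the joke id.
    return response["Prediction"]["predictedLabel"]
```

Inside a real Lambda handler, `ml_client` would be created once with `boto3.client("machinelearning")` and the features would come from the ImageMagick step.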

Next step was to display the joke to the user and take a photo of their face as they read it. This is where we ran into a small problem: we had no real way to determine when the photo should be taken. We explored a few ideas, like taking multiple photos and creating a score out of the results. In the end, however, we settled on a much simpler solution: break the joke into two parts and take a photo of the user after displaying the punch line. It worked well enough for what we were trying to achieve.

We then submitted this photo to another Lambda function that would:

- Upload the photo to S3

- Use the Rekognition API to detect faces and facial features

- Return smile
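Those three steps can be sketched as below. Again, this is a hedged illustration rather than our actual function: `detect_smile`, the bucket and key arguments, and the choice to use the largest face in frame are assumptions made for the example. The two clients are expected to behave like `boto3.client("s3")` and `boto3.client("rekognition")`.

```python
def detect_smile(s3_client, rekognition_client, bucket, key, photo_bytes):
    """Store the photo in S3, then ask Rekognition whether the face is smiling.

    Returns (smiling, confidence), or (None, 0.0) if no face was found.
    """
    s3_client.put_object(Bucket=bucket, Key=key, Body=photo_bytes)
    response = rekognition_client.detect_faces(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        Attributes=["ALL"],  # the default attributes do not include Smile
    )
    faces = response["FaceDetails"]
    if not faces:
        return None, 0.0

    # Assumption: the largest face belongs to the person reading the joke.
    def area(face):
        box = face["BoundingBox"]
        return box["Width"] * box["Height"]

    smile = max(faces, key=area)["Smile"]
    return smile["Value"], smile["Confidence"]
```

Picking the largest bounding box is one simple way to ignore passers-by in the background of a busy conference floor.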

We now had a way to take a picture of someone’s business card, analyse it, pick a joke using machine learning, display the joke and determine whether the person was smiling.

The last step was to display this to the user and get their feedback. Rekognition would tell us if it detected a person smiling in the photo and the confidence level of its detection. We displayed this to the user and asked them to rate the performance of our AI by either agreeing or disagreeing with the results. The user’s feedback was then submitted back to AWS ML to further train the AI.
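One plausible way to turn that feedback into training data is sketched below. It assumes the feedback is stored as labelled CSV rows that a later training run can pick up; the column layout, the `feedback_row` name and the rule of flipping Rekognition's verdict when the user disagrees are illustrative assumptions, not a description of what we shipped.

```python
import csv
import io


def feedback_row(card_features, joke_id, detected_smile, user_agrees):
    """Build one CSV training row from a booth interaction (hypothetical schema)."""
    # If the visitor disagrees with Rekognition, flip its verdict.
    smiled = detected_smile if user_agrees else not detected_smile
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    # Sort feature names so every row has the same column order.
    values = [card_features[name] for name in sorted(card_features)]
    writer.writerow(values + [joke_id, int(smiled)])
    return buffer.getvalue()
```

Each row pairs the business card features and the chosen joke with a 0/1 label for whether the person actually smiled, which is the question the model was meant to answer.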

And that was it. Here it is, in all its glory, The Universe’s Most AAmaazing AI in action:

All in all, the engineering part of the world’s most amazing AI took about 2-3 days to build. It gathered a lot of attention during the AWS Summit and was a great way to show how simple it is to quickly create powerful prototypes using AWS services.

In the end, the toughest challenge was finding enough conference-appropriate jokes.